Converting silently spoken words to text from real-time MR images of the vocal tract

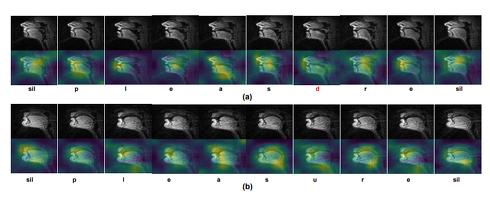

"Silent Speech and Emotion Recognition from Vocal Tract Shape Dynamics in Real-Time MRI," developed by a research team at the University of California, Merced, is a deep learning framework that uses real-time MRI (rtMRI) to capture the movement of the vocal tract during silent speech (speech produced without voice), interprets the acoustic information from that movement, and converts it to text.

Image: (top) an MR image of the vocal tract; (bottom) the boundary of the vocal tract.

The vocal tract is the cavity through which the sound produced by the vocal cords passes. In this study, the researchers observed the vocal tract using rtMRI and verified that continuous speech can be recognized from the captured movement.

For continuous speech recognition, they built an end-to-end deep learning recognition model (articulatory-to-acoustic mapping) that automatically estimates the acoustic information corresponding to a specific vocal tract configuration. To understand whether and how emotions affect articulatory movement during speech production, they also analyzed changes in articulatory geometry in each sub-region of the vocal tract (the pharyngeal region, the velar and dorsal region, the hard palate region, and the labial constriction region) with respect to different emotions and genders.

The recognition model consists of two sub-modules: a feature extraction module that receives a sequence of video frames captured with rtMRI and outputs a feature vector for each frame, and a sequence modeling module that takes the sequence of per-frame feature vectors as input and predicts and outputs one character at a time.

The dataset contains more than 4,000 videos of 10 speakers. It includes MR images of the vocal tract during articulation, audio recordings synchronized with them, and time-aligned word-level transcriptions.

To measure the performance of the proposed model, the researchers computed the phoneme error rate (PER), character error rate (CER), and word error rate (WER), which are standard evaluation metrics for automatic speech recognition (ASR) models.
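For readers unfamiliar with these metrics, the following is a minimal sketch of how PER, CER, and WER are commonly defined: the edit (Levenshtein) distance between the reference and the predicted sequence, divided by the reference length. It is not the authors' evaluation code. The function names are hypothetical, and the example sentence is illustrative rather than taken from the dataset.

```python
from typing import Sequence


def edit_distance(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Levenshtein distance between two token sequences."""
    prev = list(range(len(hyp) + 1))  # distances from the empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i]  # deleting all i reference tokens so far
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            ))
        prev = curr
    return prev[-1]


def error_rate(ref: Sequence[str], hyp: Sequence[str]) -> float:
    """Edit distance normalized by the reference length.

    Pass phoneme lists for PER, character lists for CER, word lists for WER.
    """
    if len(ref) == 0:
        raise ValueError("reference sequence must not be empty")
    return edit_distance(ref, hyp) / len(ref)


def wer(ref: str, hyp: str) -> float:
    """Word error rate on whitespace-separated words."""
    return error_rate(ref.split(), hyp.split())


def cer(ref: str, hyp: str) -> float:
    """Character error rate (spaces count as characters)."""
    return error_rate(list(ref), list(hyp))


if __name__ == "__main__":
    reference = "she had your dark suit"   # illustrative sentence only
    predicted = "she had dark suits"
    print(f"WER: {wer(reference, predicted):.2f}")
    print(f"CER: {cer(reference, predicted):.2f}")
```

Because insertions are counted, any of these rates can exceed 100% when the prediction is much longer than the reference.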
As a result, the model kept the average PER to 40.6% while demonstrating that a sequence of vocal tract shapes can be automatically mapped to entire sentences.

Furthermore, an analysis by emotion (neutral, happiness, anger, sadness) showed that, for non-neutral emotions, the sub-regions along the lower boundary of the vocal tract tend to change more prominently than those along the upper boundary. The change in each sub-region is also affected by gender: for female speakers, distortion of the pharyngeal region and of the velar and dorsal region was larger for negative emotions than for positive emotions.

These results suggest that the recognition model could serve as an everyday input medium and could help people with speech disorders, voice disorders, or visual impairments interact with computers.

Source and Image Credits: Laxmi Pandey and Ahmed Sabbir Arif, “Silent Speech and Emotion Recognition from Vocal Tract Shape Dynamics in Real-Time MRI”, SIGGRAPH '21: ACM SIGGRAPH 2021 Posters, August 2021, Article No.: 27, Pages 1-2, https://doi.org/10.1145/3450618.3469176

* This article was written by Yuki Yamashita, who runs the web media "Seamless," which introduces the latest research in technology. Mr. Yamashita picks out and explains highly novel research papers.

ITMEDIA NEWS